A.I. Begins Ushering In an Age of Killer Robots

For The New York Times. Text by Paul Mozur and Adam Satariano. Published on July 2, 2024.

In a field on the outskirts of Kyiv, the founders of Vyriy, a Ukrainian drone company, were recently at work on a weapon of the future.

To demonstrate it, Oleksii Babenko, 25, Vyriy’s chief executive, hopped on his motorcycle and rode down a dirt path. Behind him, a drone followed, as a colleague tracked the movements from a briefcase-size computer.

Until recently, a human would have piloted the quadcopter. No longer. Instead, after the drone locked onto its target — Mr. Babenko — it flew itself, guided by software that used the machine’s camera to track him.

The motorcycle’s growling engine was no match for the silent drone as it stalked Mr. Babenko. “Push, push more. Pedal to the metal, man,” his colleagues called out over a walkie-talkie as the drone swooped toward him. “You’re screwed, screwed!”

If the drone had been armed with explosives, and if his colleagues hadn’t disengaged the autonomous tracking, Mr. Babenko would have been a goner.

Vyriy is just one of many Ukrainian companies working on a major leap forward in the weaponization of consumer technology, driven by the war with Russia. The pressure to outthink the enemy, along with huge flows of investment, donations and government contracts, has turned Ukraine into a Silicon Valley for autonomous drones and other weaponry.

What the companies are creating is technology that makes human judgment about targeting and firing increasingly tangential. The widespread availability of off-the-shelf devices, easy-to-design software, powerful automation algorithms and specialized artificial intelligence microchips has pushed a deadly innovation race into uncharted territory, fueling a potential new era of killer robots.

The Vyriy team testing an FPV drone outside Kyiv

The most advanced versions of the technology that allows drones and other machines to act autonomously have been made possible by deep learning, a form of A.I. that uses large amounts of data to identify patterns and make decisions. Deep learning has helped generate popular large language models, like OpenAI’s GPT-4, but it also enables models to interpret and respond to video and camera footage in real time. That means software that once helped a drone follow a snowboarder down a mountain can now become a deadly tool.

In more than a dozen interviews with Ukrainian entrepreneurs, engineers and military units, a picture emerged of a near future when swarms of self-guided drones can coordinate attacks and machine guns with computer vision can automatically shoot down soldiers. More outlandish creations, like a hovering unmanned copter that wields machine guns, are also being developed.

The weapons are cruder than the slick stuff of science-fiction blockbusters, like “The Terminator” and its T-1000 liquid-metal assassin, but they are a step toward such a future. While these weapons aren’t as advanced as expensive military-grade systems made by the United States, China and Russia, what makes the developments significant is their low cost — just thousands of dollars or less — and ready availability.

Except for the munitions, many of these weapons are built with code found online and components such as hobbyist computers, like Raspberry Pi, that can be bought from Best Buy or a hardware store. Some U.S. officials said they worried that the abilities could soon be used to carry out terrorist attacks.

For Ukraine, the technologies could provide an edge against Russia, which is also developing autonomous killer gadgets — or simply help it keep pace. The systems raise the stakes in an international debate about the ethical and legal ramifications of A.I. on the battlefield. Human rights groups and United Nations officials want to limit the use of autonomous weapons for fear that they may trigger a new global arms race that could spiral out of control.

In Ukraine, such concerns are secondary to fighting off an invader.

Mykhailo Fedorov during a symposium announcing a global fundraising campaign with United24, a governmental fundraising platform, to raise money for robotics during the war with Russia

“We need maximum automation,” said Mykhailo Fedorov, Ukraine’s minister of digital transformation, who has led the country’s efforts to use tech start-ups to expand advanced fighting capabilities. “These technologies are fundamental to our victory.”

Autonomous drones like Vyriy’s have already been used in combat to hit Russian targets, according to Ukrainian officials and video verified by The New York Times. Mr. Fedorov said the government was working to fund drone companies to help them rapidly scale up production.

Major questions loom about what level of automation is acceptable. For now, the drones require a pilot to lock onto a target, keeping a “human in the loop” — a phrase often invoked by policymakers and A.I. ethicists. Ukrainian soldiers have raised concerns about the potential for malfunctioning autonomous drones to hit their own forces. In the future, constraints on such weapons may not exist.

Ukraine has “made the logic brutally clear of why autonomous weapons have advantages,” said Stuart Russell, an A.I. scientist and professor at the University of California, Berkeley, who has warned about the dangers of weaponized A.I. “There will be weapons of mass destruction that are cheap, scalable and easily available in arms markets all over the world.”

A Drone Silicon Valley

In a ramshackle workshop in an apartment building in eastern Ukraine, Dev, a 28-year-old soldier in the 92nd Assault Brigade, has helped push innovations that turned cheap drones into weapons. First, he strapped bombs to racing drones, then added larger batteries to help them fly farther and recently incorporated night vision so the machines can hunt in the dark.

In May, he was one of the first to use autonomous drones, including those from Vyriy. While some required improvements, Dev said, he believed that they would be the next big technological jump to hit the front lines.

Autonomous drones are “already in high demand,” he said. The machines have been especially helpful against jamming that can break communications links between drone and pilot. With the drone flying itself, a pilot can simply lock onto a target and let the device do the rest.

Makeshift factories and labs have sprung up across Ukraine to build remote-controlled machines of all sizes, from long-range aircraft and attack boats to cheap kamikaze drones — abbreviated as F.P.V.s, for first-person view, because they are guided by a pilot wearing virtual-reality-like goggles that give a view from the drone. Many are precursors to machines that will eventually act on their own.

Dev, 92nd Brigade

Efforts to automate F.P.V. flights began last year, but were slowed by setbacks building flight control software, according to Mr. Fedorov, who said those problems had been resolved. The next step was to scale the technology with more government spending, he said, adding that about 10 companies were already making autonomous drones.

“We already have systems which can be mass-produced, and they’re now extensively tested on the front lines, which means they’re already actively used,” Mr. Fedorov said.

Some companies, like Vyriy, use basic computer vision algorithms, which analyze and interpret images and help a computer make decisions. Other companies are more sophisticated, using deep learning to build software that can identify and attack targets. Many of the companies said they pulled data and videos from flight simulators and frontline drone flights.

One Ukrainian drone maker, Saker, built an autonomous targeting system with A.I. processes originally designed for sorting and classifying fruit. During the winter, the company began sending its technology to the front lines, testing different systems with drone pilots. Demand soared.

By May, Saker was mass-producing single-circuit-board computers loaded with its software that could be easily attached to F.P.V. drones so the machines could auto-lock onto a target, said the company’s chief executive, who asked to be referred to only by his first name, Viktor, for fear of retaliation by Russia.

A drone assembly and testing facility in central Ukraine

The drone then crashes into its target “and that’s it,” he said. “It resists wind. It resists jamming. You just have to be precise with what you’re going to hit.”

Saker now makes 1,000 of the circuit boards a month and plans to expand to 9,000 a month by the end of the summer. Several of Ukraine’s military units have already hit Russian targets on the front lines with Saker’s technology, according to the company and videos confirmed by The Times.

In one clip of Saker technology shared on social media, a drone flies over a field scarred by shelling. A box at the center of the pilot’s viewfinder suddenly zooms in on a tank, indicating a lock. The drone attacks on its own, exploding into the side of the armor.

Saker has gone further in recent weeks, successfully using a reconnaissance drone that identified targets with A.I. and then dispatched autonomous kamikaze drones for the kill, Viktor said. In one case, the system struck a target 25 miles away.

“Once we reach the point when we don’t have enough people, the only solution is to substitute them with robots,” said Rostyslav, a Saker co-founder who also asked to be referred to only by his first name.

A Miniaturized War

On a hot afternoon last month in the eastern Ukrainian region known as the Donbas, Yurii Klontsak, a 23-year-old reservist, trained four soldiers to use the latest futuristic weapon: a gun turret with autonomous targeting that works with a PlayStation controller and a tablet.

Speaking over the booms of nearby shelling, Mr. Klontsak explained how the gun, named Wolly for its resemblance to the Pixar robot WALL-E, can auto-lock on a target up to 1,000 meters away and jump between preprogrammed positions to quickly cover a broad area. The company making the weapon, DevDroid, was also developing an auto-aim feature to track and hit moving targets.

“When I first saw the gun, I was fascinated,” Mr. Klontsak said. “I understood this was the only way, if not to win this war, then to at least hold our positions.”

Yurii Klontsak, an instructor working with DevDroid, demonstrates how to control the Wolly, a gun turret with autonomous targeting

The gun is one of several that have emerged on the front lines using A.I.-trained software to automatically track and shoot targets. Much like the object identification featured in surveillance cameras, the software draws a digital box on the screen around humans and other would-be targets. All that’s left for the shooter to do is remotely pull the trigger with a video game controller.

For now, the gun makers say they do not allow the machine gun to fire without a human pressing a button. But they also said it would be easy to make one that could.

Many of Ukraine’s innovations are being developed to counter Russia’s advancing weaponry. Ukrainian soldiers operating machine guns are a prime target for Russian drone attacks. With robot weapons, no human dies when a machine gun is hit. New algorithms, still under development, could eventually help the guns shoot Russian drones out of the sky.

Such technologies, and the ability to quickly build and test them on the front lines, have gained attention and investment from overseas. Last year, Eric Schmidt, a former Google chief executive, and other investors set up a firm called D3 to invest in emerging battlefield technologies in Ukraine. Other defense companies, such as Helsing, are also teaming up with Ukrainian firms.

Ukrainian companies are moving more quickly than competitors overseas, said Eveline Buchatskiy, a managing partner at D3, adding that the firm asks the companies it invests in outside Ukraine to visit the country so they can speed up their development.

“There’s just a different set of incentives here,” she said.

Oleksandr Yabchanka with his fellow soldiers at a testing range with the Roboneers machine gun mounted on the UGV

Roboneers, a Ukrainian company, developed an automated weapon with a gun turret mounted on a rolling drone.

Often, battlefield demands pull together engineers and soldiers. Oleksandr Yabchanka, a commander in Da Vinci Wolves, a battalion known for its innovation in weaponry, recalled how the need to defend the “road of life” — a route used to supply troops fighting Russians along the eastern front line in Bakhmut — had spurred invention. Imagining a solution, he posted an open request on Facebook for a computerized, remote-controlled machine gun.

Within several months, Mr. Yabchanka had a working prototype from a firm called Roboneers. The gun was almost instantly helpful for his unit.

“We could sit in the trench drinking coffee and smoking cigarettes and shoot at the Russians,” he said.

Mr. Yabchanka’s input later helped Roboneers develop a new sort of weapon. The company mounted the machine gun turret atop a rolling ground drone to help troops make assaults or quickly change positions. The application has led to a bigger need for A.I.-powered auto-aim, the chief executive of Roboneers, Anton Skrypnyk, said.

Anton Skrypnyk at the Roboneers workshop in western Ukraine

Similar partnerships have pushed other advances. On a drone range in May, Swarmer, another local company, held a video call with a military unit to walk soldiers through updates to its software, which enables drones to carry out swarming attacks without a pilot.

The software from Swarmer, which was formed last year by a former Amazon engineer, Serhii Kupriienko, was built on an A.I. model that was trained with large amounts of data on frontline drone missions. It enables a single technician to operate up to seven drones on bombing and reconnaissance missions.

Recently, Swarmer added abilities that can guide kamikaze attack drones up to 35 miles. The hope is that the software, which has been in tests since January, will cut down on the number of people required to operate the miniaturized air forces that dominate the front lines.

During a demonstration, a Swarmer engineer at a computer watched a map as six autonomous drones buzzed overhead. One after the other, large bomber drones flew over a would-be target and dropped water bottles in place of bombs.

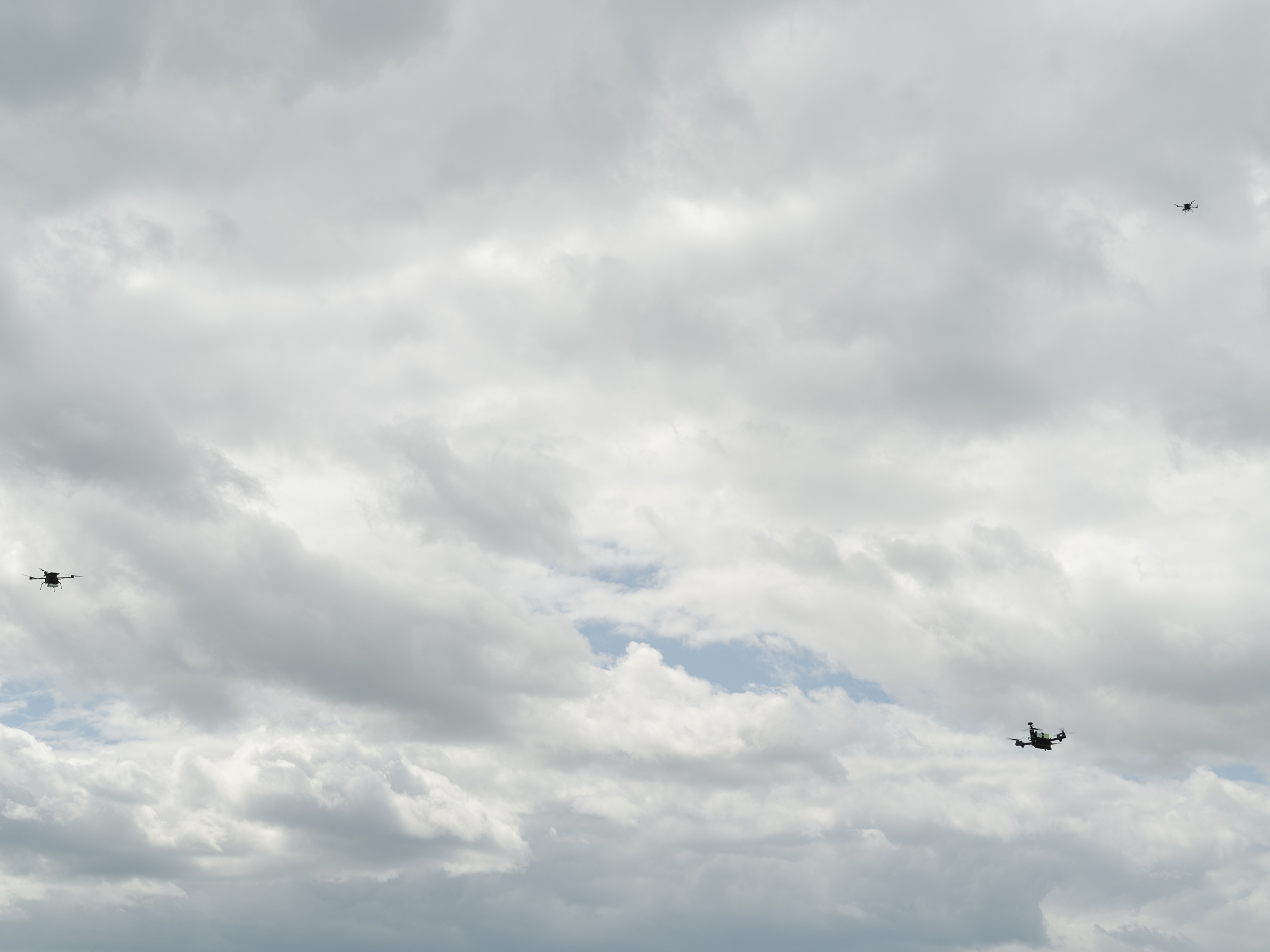

Swarmer field drone testing in the Kyiv region

Some drone pilots are afraid they will be replaced entirely by the technology, Mr. Kupriienko said.

“They say: ‘Oh, it flies without us. They will take away our remote controls and put a weapon in our hand,’” he said, referring to the belief that it’s safer to fly a drone than occupy a trench on the front.

“But I say, no, you’ll now be able to fly with five or 10 drones at the same time,” he said. “The software will help them fight better.”

Swarmer drones during field testing in the Kyiv region

The Rise of Slaughterbots

In 2017, Mr. Russell, the Berkeley A.I. researcher, released an online film, “Slaughterbots,” warning of the dangers of autonomous weapons. In the movie, roving packs of low-cost armed A.I. drones use facial recognition technology to hunt down and kill targets.

What’s happening in Ukraine moves us toward that dystopian future, Mr. Russell said. He is already haunted, he said, by Ukrainian videos of soldiers who are being pursued by weaponized drones piloted by humans. There’s often a point when soldiers stop trying to escape or hide because they realize they cannot get away from the drone.

“There’s nowhere for them to go, so they just wait around to die,” Mr. Russell said.

He isn’t alone in fearing that Ukraine is a turning point. In Vienna, members of a panel of U.N. experts also said they worried about the ramifications of the new techniques being developed in Ukraine.

Officials have spent more than a decade debating rules about the use of autonomous weapons, but few expect any international deal to set new regulations, especially as the United States, China, Israel, Russia and others race to develop even more advanced weapons. In one U.S. program announced in August 2023, known as the Replicator initiative, the Pentagon said it planned to mass-produce thousands of autonomous drones.

“The geopolitics makes it impossible,” said Alexander Kmentt, Austria’s top negotiator on autonomous weapons at the U.N. “These weapons will be used, and they’ll be used in the military arsenal of pretty much everybody.”

Nobody expects countries to accept an outright ban of such weapons, he said, “but they should be regulated in a way that we don’t end up with an absolutely nightmare scenario.”

Groups including the International Committee of the Red Cross have pushed for legally binding rules that prohibit certain types of autonomous weapons, restrict the use of others and require a level of human control over decisions to use force.

For many in Ukraine, the debate is academic. They are outgunned and outmanned.

“We need to win first,” Mr. Fedorov, the minister of digital transformation, said. “To do that, we will do everything we can to introduce automation to its maximum to save the lives of our soldiers.”